Tracking Contacts – Results

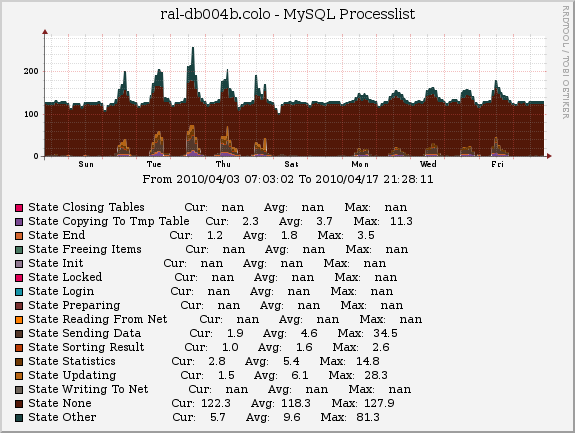

Sometimes the best way to speed something up is to slow other things down. We’d been running out of database connections because queries were running too slowly on one of our shards/engines. We discovered that a major source of load on our database servers was subscribers opening and clicking the messages sent by the users on that shard. When a large client sent out an email blast, we’d get a surge of opens and clicks and struggle to handle them all at the same time.

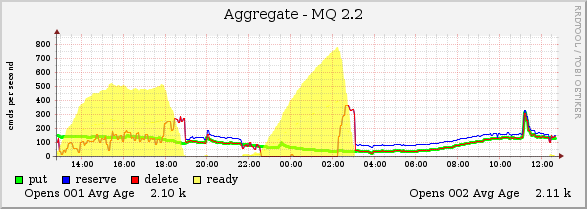

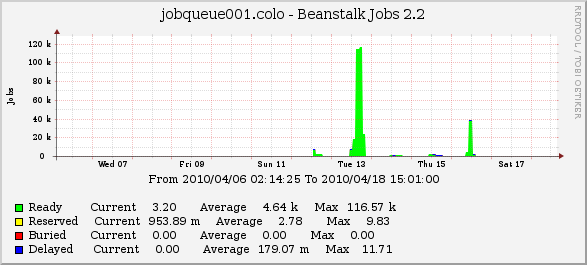

We changed opens and clicks to process in the background, placing what’s called a message queue between receiving them and processing them. The side effect is that we’ll sometimes see a slight delay in processing opens and clicks, so we’ll be monitoring the latency of opens and clicks.
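To make the idea concrete, here is a minimal sketch of the pattern, not our production code. It assumes an in-process `queue.Queue` standing in for the message queue and a plain list standing in for the database table; the names `record_event`, `worker`, `run_workers`, and `shutdown` are hypothetical.

```python
import queue
import threading
import time

events = queue.Queue()           # stand-in for the message queue (assumption)
stored = []                      # stand-in for the database table (assumption)
stored_lock = threading.Lock()


def record_event(kind, subscriber_id):
    """Called when an open or click arrives: enqueue it and return right away."""
    events.put({"kind": kind, "subscriber_id": subscriber_id, "queued_at": time.time()})


def worker():
    """Background consumer: takes events off the queue and does the slow write."""
    while True:
        event = events.get()
        if event is None:        # sentinel value tells this worker to stop
            events.task_done()
            break
        with stored_lock:
            stored.append(event)  # a real worker would write to the database here
        events.task_done()


def run_workers(num_workers):
    """Start the requested number of background worker threads."""
    threads = [threading.Thread(target=worker, daemon=True) for _ in range(num_workers)]
    for t in threads:
        t.start()
    return threads


def shutdown(threads):
    """Send one sentinel per worker, then wait for all of them to exit."""
    for _ in threads:
        events.put(None)
    for t in threads:
        t.join()
```

The point of the design is that `record_event` never waits on the database, so a surge of opens and clicks grows the queue instead of piling up database connections.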

We started to see the benefit immediately in the week after we deployed this change. The load on the databases decreased, and the opens and clicks buffered as designed. During the first few days we saw delays of as much as 30-45 minutes in processing during some high-traffic periods. However, we saw no issues in the DB4 cluster, where we’d been seeing consistent issues for weeks. While these delays aren’t ideal, the fact that these bursts of opens and clicks didn’t bring down the database servers was a beautiful new trend.

Here you can see some of the buildup of opens and clicks during the first week, which created processing delays for customers that weren’t within our tolerance. We fixed those the following day by increasing the number of threads processing the queue. These days we see much shorter queues, and only at peak times do we see delays of more than 1 minute.
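As a rough illustration of why adding threads helps, a backlog only shrinks when total processing capacity exceeds the arrival rate. The sketch below is a back-of-the-envelope estimate under that simple assumption; the function name and the idea of a fixed per-worker rate are hypothetical, not measurements from our system.

```python
def drain_time_seconds(backlog, arrival_rate, workers, per_worker_rate):
    """Estimate how long a backlog takes to clear.

    backlog:          events currently waiting in the queue
    arrival_rate:     new events arriving per second
    workers:          number of processing threads
    per_worker_rate:  events each thread handles per second (assumed constant)
    """
    capacity = workers * per_worker_rate
    if capacity <= arrival_rate:
        return float("inf")      # the queue never drains; it only grows or holds
    return backlog / (capacity - arrival_rate)
```

With made-up numbers, `drain_time_seconds(1000, 10, 4, 5)` gives 100 seconds, and doubling the workers to 8 cuts that to about 33 seconds.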

Here’s the number of connections during the two-week periods before and after this was released. You can see a decrease in active connections and far fewer spikes in connections for the machine. This means more responsiveness to customers and no running out of connections!

A side benefit showed up last week, when we experienced database downtime due to some corruption. The silver lining of this potential disaster was that we were able to build up a queue of 800,000 opens and clicks, with delay being the only customer impact. In previous instances, we would have had to reap the Apache logs to process these opens and clicks.